Lethal Cognitive Distortions

Here are a few ways to wade through the cognitive distortions that creep into survey-based research while building products…

If you have floated surveys & analyzed the responses as part of your research, you know how gruelling the effort can be, more so for products touted as potential game changers. The responses themselves can turn out terse. Worse, skewed responses filled with anomalies, though not always obvious, can creep in & get carried through, leading to a unanimous decision to advance to the next stage of the PLC (product life cycle). Naturally, many teams take the in-house research route even though top-notch data is available in the market, because buying it comes at a cost that most orgs, & start-ups in particular, consider pretty steep. What’s next? Given the effort, time & investment at stake, teams then feel forced to swallow those survey results hook, line & sinker.

As Aaron T. Beck of the University of Pennsylvania described in his work on CBT (Cognitive Behavioral Therapy), illogical, distorted thinking is a pretty common trait among people who are dealing with some kind of stress & carry a negative outlook toward the outside world in general & their own lives in particular.

Now, here’s a question: who is not going through stress in 2025?

Not too many people would make that list.

So, given that, what’s the probability that these cognitive distortions don’t affect survey responses?

That is NEARLY ZERO

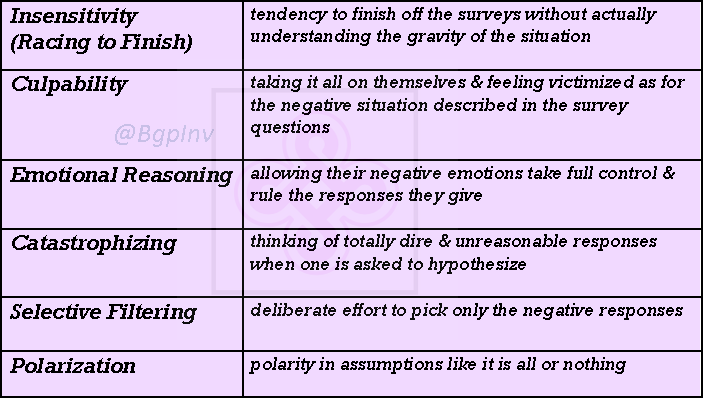

Here are a few examples of such cognitive distortions:

Put 2 & 2 together & you will realize how scary it can be to build something purely on the basis of surveys. The responses could be riddled with bias & may not even be a rough approximation of the truth, let alone align with the voice of the market, which makes survey-only decisions one of the easiest ways to flush dollars down the drain. This calls for a systemic change in how teams gauge the VoM (voice of the market) & for tweaks to a few age-old processes, so that teams extract just the right quantum of insight & consistently build the right thing.

Mitigation

Over the years, working with user groups across the stages of the PLC, picking their brains, getting the drift of their thinking & capturing their emotions & motivations, I have realized one thing for sure: there can be a visible gap between what users say or believe & what reality actually is. One important reason is that many users lack thorough knowledge of the subject & can be caught unaware despite years of work experience in that very domain.

For example, if you are building an advanced fintech app to support the workflow of analysts & consultants at investment banks, it is common to assume that anyone who falls within the sample space knows quite a bit about investments in particular & finance in general. The bitter truth is that a consultant with a qualification from a reputed school could still be unaware of a lot within the subject, simply because parts of it sit far outside their regular day-to-day workflow.

Beware, this is not about the TAM (total addressable market). Someone can be an integral part of your future user base & still be under-qualified to participate in a survey because they lack the prerequisite knowledge. Popping the questionnaire to such people & winning their participation could distort things rather than help resolve them. Blame it on the very assumption that someone who works at a certain place or org. ought to be aware of some process (x) or some nuanced part of the domain.

But there are a few simple & straightforward ways to mitigate the problems that result from cognitive distortion.

Here are a few things you can do:

1) Participant Selection

One of the first & most important questions to ask is: “Who is the ideal participant for this survey, given my product idea / the next feature?” The answer is NOT the entire target market, of course. One can’t capture the voice of a million users, & quite frankly that isn’t needed either. Once the TAM, your broad sample space, is established, it ought to be segregated, sorted & ranked to earmark the groups or individuals who form a subset of that sample space but are prime candidates & a super-fit for receiving the survey & providing responses.

Surveys in the recent past have changed a lot in that sense. I put it to you, my reader: think back to the surveys you have taken thus far (I am assuming somewhere between 20 & 100). As you will notice, most of them dive right in from question 1, popping nuanced or detailed questions that require you to dig deep & travel back in time to a certain memory so you can place yourself in the situation & answer the question being asked.

Today, it is not about quantity (the number of participants) but about whether the respondent qualifies as a participant in the first place. Just because someone holds an MBA / PGP in Finance on paper & is employed at an investment bank, one can’t assume that they are well aware of the processes around SWIFT codes, the clearance of checks or the functioning of an ACH (automated clearing house). Those are purely corporate banking operations. Only people with experience in those areas would “carry working knowledge” or have a “nuanced understanding of the operations”, which are 2 entirely different things in themselves.
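To make the segregation & ranking step a little more concrete, here is a minimal sketch in Python. It assumes each candidate in the TAM has been tagged with a segment & a couple of hypothetical attributes (hands-on years in the domain, & whether the workflow your product targets is part of their day); the attribute names, the weights & the `earmark` helper are illustrative assumptions, not a prescribed scoring model. In line with the point above, the weights deliberately favour day-to-day working knowledge over seniority on paper.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical weights: tune these to your own definition of a "super-fit".
# Day-to-day exposure to the workflow is weighted above raw years of seniority.
YEARS_WEIGHT = 1.0
WORKFLOW_WEIGHT = 10.0
YEARS_CAP = 10  # cap so that seniority alone cannot dominate the score


@dataclass
class Candidate:
    cid: str
    segment: str          # hypothetical segment label within the TAM, e.g. "ib"
    domain_years: int     # hands-on years in the relevant domain
    uses_workflow: bool   # does the workflow the product targets feature in their day?


def fit_score(c: Candidate) -> float:
    """Score a candidate's fit for the survey; higher is better."""
    years = min(c.domain_years, YEARS_CAP)
    return YEARS_WEIGHT * years + (WORKFLOW_WEIGHT if c.uses_workflow else 0.0)


def earmark(tam: List[Candidate], target_segment: str, k: int) -> List[str]:
    """Segregate the TAM by segment, rank by fit score, and keep the top k IDs.

    Ties are broken by candidate ID so the ranking is deterministic.
    """
    in_segment = [c for c in tam if c.segment == target_segment]
    ranked = sorted(in_segment, key=lambda c: (-fit_score(c), c.cid))
    return [c.cid for c in ranked[:k]]


if __name__ == "__main__":
    tam = [
        Candidate("a", "ib", 3, True),      # score 13.0
        Candidate("b", "ib", 12, False),    # score 10.0: senior, but far from the workflow
        Candidate("c", "retail", 8, True),  # different segment: filtered out
        Candidate("d", "ib", 1, True),      # score 11.0
    ]
    print(earmark(tam, "ib", 2))  # ['a', 'd']
```

Note how candidate “b”, despite the most years, is not earmarked: the sketch encodes the idea that working knowledge of the workflow matters more than seniority alone.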

Some questions you ought to have answers to at this stage are:

Who is my survey participant?

Are their qualifications important?

How much expertise do they carry in the given area?

Would that experience help them qualify as a participant?

What would the course of action be in case they don’t qualify?

Eliminating participants based on a few initial questions is a great way to approach this problem. That way, your survey responses are far more likely to be legitimate & to closely represent the real voice of your target market.
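A minimal sketch of this elimination step in Python, assuming the screener questions are asked before the main survey & each answer is checked against a rule. The question IDs, thresholds (e.g. at least 2 years in payments operations) & helper names are hypothetical, made up to mirror the ACH example rather than taken from any survey tool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A respondent's screener answers: question ID -> answer.
Answers = Dict[str, object]


@dataclass
class Criterion:
    """One screening rule: a question ID and a predicate its answer must satisfy."""
    question_id: str
    is_met: Callable[[object], bool]


# Hypothetical criteria for the ACH example: a finance degree alone is not enough,
# so the screener asks about hands-on experience with the operation itself.
CRITERIA: List[Criterion] = [
    Criterion("works_in_banking", lambda a: a is True),
    Criterion("years_in_payments_ops", lambda a: isinstance(a, int) and a >= 2),
    Criterion("has_handled_ach_transfers", lambda a: a is True),
]


def qualifies(answers: Answers, criteria: List[Criterion] = CRITERIA) -> bool:
    """True only if every criterion is met; an unanswered question counts as a fail."""
    return all(
        c.question_id in answers and c.is_met(answers[c.question_id])
        for c in criteria
    )


def screen(respondents: Dict[str, Answers]) -> List[str]:
    """Return the IDs of respondents who pass the screener, in input order."""
    return [rid for rid, answers in respondents.items() if qualifies(answers)]


if __name__ == "__main__":
    pool = {
        # Works at a bank but has no hands-on ACH experience: screened out.
        "r1": {"works_in_banking": True, "years_in_payments_ops": 0,
               "has_handled_ach_transfers": False},
        # Payments operations specialist: qualifies.
        "r2": {"works_in_banking": True, "years_in_payments_ops": 5,
               "has_handled_ach_transfers": True},
        # Skipped a screening question: treated as not qualifying.
        "r3": {"works_in_banking": True, "has_handled_ach_transfers": True},
    }
    print(screen(pool))  # ['r2']
```

Treating an unanswered screener question as a fail is a conservative design choice: when in doubt, a respondent is routed out rather than allowed to add noise to the main survey.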

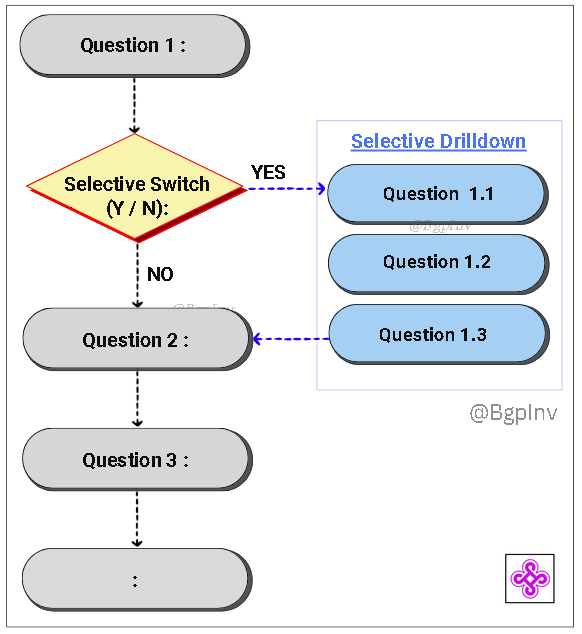

2) Selective Drilldown

Designing these surveys is always tricky: one ought to keep them short & simple so they wrap up quickly, whilst also delving into the nuances & hitting the areas that matter. A good approach is to selectively open up a few extra questions that are triggered dynamically by the responses a participant chooses for the initial questions.

The whole point of a survey is to capture the information deemed necessary. Why leave the questionnaire at a high level of abstraction & then fall back on inaccurate assumptions, when an extra minute or two could push it deeper & give you a more detailed perspective of the users’ lives? If you are observant & use methods like triangulation (cross-checking a finding against other data sources or research methods), that could also help you unearth a whole new side of things.

Suppose you have earmarked a group of participants for the survey & asked them about their knowledge of ACHs. A positive answer ought to then trigger a string of questions about, say, PEACH (Pan-European Automated Clearing House), probing the pain users face with the complex rules or the stringent procedures for gaining regulatory compliance.
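This kind of selective drilldown is often implemented as branching (skip) logic. A minimal sketch in Python, assuming a survey modelled as an ordered list of questions where each follow-up carries an optional, hypothetical `show_if` condition on earlier answers; the question IDs & wording are illustrative, not from any particular survey platform.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Answers collected so far: question ID -> answer text.
Answers = Dict[str, str]


@dataclass
class Question:
    qid: str
    text: str
    # Condition on earlier answers; None means the question is always shown.
    show_if: Optional[Callable[[Answers], bool]] = None


# Hypothetical questionnaire for the ACH / PEACH example.
SURVEY: List[Question] = [
    Question("knows_ach", "Have you worked with ACH transfers? (yes/no)"),
    # The PEACH drilldown opens up only for respondents who said "yes" above.
    Question("uses_peach", "Do you currently use PEACH? (yes/no)",
             show_if=lambda a: a.get("knows_ach") == "yes"),
    # Pain-point probe for ACH-savvy respondents who have not adopted PEACH.
    Question("peach_hurdle", "What is the biggest hurdle: rules, set-up procedures or risk?",
             show_if=lambda a: a.get("knows_ach") == "yes" and a.get("uses_peach") == "no"),
    Question("closing", "Anything else you would like to add?"),
]


def run_survey(survey: List[Question], respond: Callable[[Question], str]) -> Answers:
    """Ask the questions in order, skipping any whose show_if condition is false.

    `respond` supplies the answer to a question (input() in a CLI, or a scripted
    function in tests). Conditions see only the answers collected so far, so a
    follow-up can depend only on questions that come before it.
    """
    answers: Answers = {}
    for q in survey:
        if q.show_if is None or q.show_if(answers):
            answers[q.qid] = respond(q)
    return answers


if __name__ == "__main__":
    # Scripted respondent who knows ACH but has not adopted PEACH.
    replies = {"knows_ach": "yes", "uses_peach": "no",
               "peach_hurdle": "set-up procedures", "closing": ""}
    print(list(run_survey(SURVEY, lambda q: replies[q.qid])))
    # ['knows_ach', 'uses_peach', 'peach_hurdle', 'closing']
```

A respondent who answers “no” to the first question sees only `knows_ach` & `closing`, so the survey stays short for them while ACH-savvy respondents get the deeper PEACH questions.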

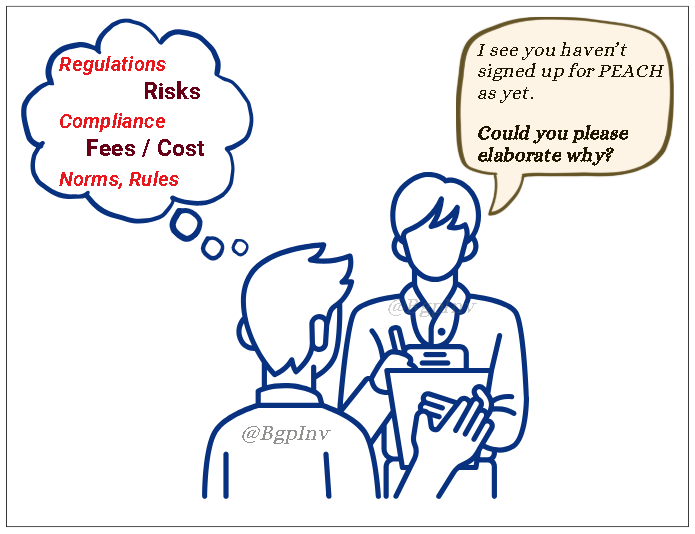

3) 1:1 User Interviews

Surveys are great at determining the overall trend or general mood of a given market. But that may not suffice when you need a much more detailed & personalized opinion, which calls for a sizeable window of time set aside to unearth a lot more about the users’ pain points.

One may raise the logistical challenge & debate whether it is feasible to interview that many users, but again, it isn’t about the numbers (quantity) here; it is about the quality of the responses.

In the previous section, the selective drilldown makes the user’s choice clear. The motivation behind that choice may not be, though: analyze the motivations & they could still deviate by 35-45% or more. To each their own. At a very simple level, the reason one person buys a piece of land may not match another’s, even though the underlying motive for both could be investment for the future, albeit the distant future in some cases.

Getting back to our banking example, the reasons why users sit on the fence about PEACH could be very diverse. For some it is the rules; for others, wading through all the procedures needed to set it up seems like a huge task; & for some it could simply be the perception of a major risk.

4) Respondent Education

This is one step whose importance very few seem to understand. When floating surveys, one naturally expects some degree of fairness in the participants’ responses. One look at some survey responses, though, & you may rightly conclude that a little training for the participants would have been time well spent.

Most teams perceive this step as anything but straightforward: it is pretty gruelling & could prove to be a dead investment, because users tend to fill out surveys based on what they perceive as right. And fiddling too much with that perception can be dangerous, as it could prey on the participants’ minds & end up inducing even more bias.

Teaching participants the importance of thoughtfulness & mindfulness could help a great deal, but it calls for a lot of caution as well.